Hello,

I’m sorry that your robots.txt file is causing multiple errors and affecting your site’s crawling and ranking. Thank you for the detailed description of the problems you are running into.

Based on the information you shared, it seems that the robots.txt file generated by Rank Math is timing out or cannot be reached. In addition, your hosting restrictions prevent robots.txt from being rewritten, which complicates matters further.

To address the problems with your robots.txt file, especially the loading issues and redirection inconsistencies, we recommend the following steps:

- Check for Existing robots.txt Files: Verify whether there is a physical robots.txt file in your site’s root directory that conflicts with the one Rank Math generates. If you find one, delete it as described in our guide here.

- Adjusting robots.txt for WWW Version: Since your robots.txt loads with the WWW version but not without it, make sure the file can also be reached without WWW. The usual fix is a redirect rule in your hosting environment that forwards the non-WWW host to the WWW version; alternatively, you can serve a separate robots.txt file on the non-WWW host.

- Improving Crawling Frequency: Once the file loads correctly, submit the updated robots.txt in Google Search Console and other search engine webmaster tools so that crawlers pick up the changes sooner.

- Investigating Webmail Robots.txt Issue: The strange webmail-related robots.txt file you mentioned may also be affecting crawling. Make sure it is configured correctly, or remove it if it is not needed.
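To see how the WWW and non-WWW addresses behave, it can help to request robots.txt on each variant and compare the results. The following is only a minimal Python sketch under stated assumptions, not a Rank Math tool: the function names `robots_url_variants` and `check`, the `robots-check/0.1` user agent, and the 10-second timeout are all chosen for illustration.

```python
import urllib.error
import urllib.request
from urllib.parse import urlsplit


def robots_url_variants(domain: str) -> list[str]:
    """Build robots.txt URLs for the https/http and non-WWW/WWW forms of a domain."""
    # Accept either a bare host ("example.com") or a full URL.
    host = urlsplit(domain if "//" in domain else "//" + domain).hostname or ""
    bare = host[4:] if host.startswith("www.") else host
    return [
        f"{scheme}://{prefix}{bare}/robots.txt"
        for scheme in ("https", "http")
        for prefix in ("", "www.")
    ]


def check(url: str, timeout: float = 10.0) -> str:
    """Request one URL and describe the status and the address it ended on."""
    # The user agent string is a hypothetical placeholder.
    request = urllib.request.Request(url, headers={"User-Agent": "robots-check/0.1"})
    try:
        # urlopen follows redirects, so geturl() shows where the request landed.
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return f"{url} -> {response.status} (final URL: {response.geturl()})"
    except urllib.error.HTTPError as error:
        return f"{url} -> HTTP {error.code}"
    except OSError as error:
        # Covers DNS failures, refused connections, and timeouts.
        return f"{url} -> failed: {error}"
```

Printing `check(url)` for each entry returned by `robots_url_variants("giocone.com")` should show which variants answer directly, which redirect, and which time out. Ideally all four end on the same final URL.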

Unfortunately, because of the hosting limitations on rewriting robots.txt and the complexity of the issues you are facing, you may need further investigation and help from your hosting provider or a developer to implement the necessary changes.

Regarding the last crawl dates you mentioned, it’s crucial to resolve these robots.txt issues promptly to ensure proper indexing and ranking of your site’s content.

If you need additional help or encounter any challenges while addressing these problems, please update this ticket, and our support team will assist you further.

Thank you for your patience and cooperation as we work towards resolving these robots.txt issues for your website.

Best regards.

Sources:

Common robots.txt Issues & How to Fix Them » Rank Math

How to Fix the ‘Blocked Due to Other 4xx Issue’ Error » Rank Math

Hello,

Thank you for contacting Rank Math support.

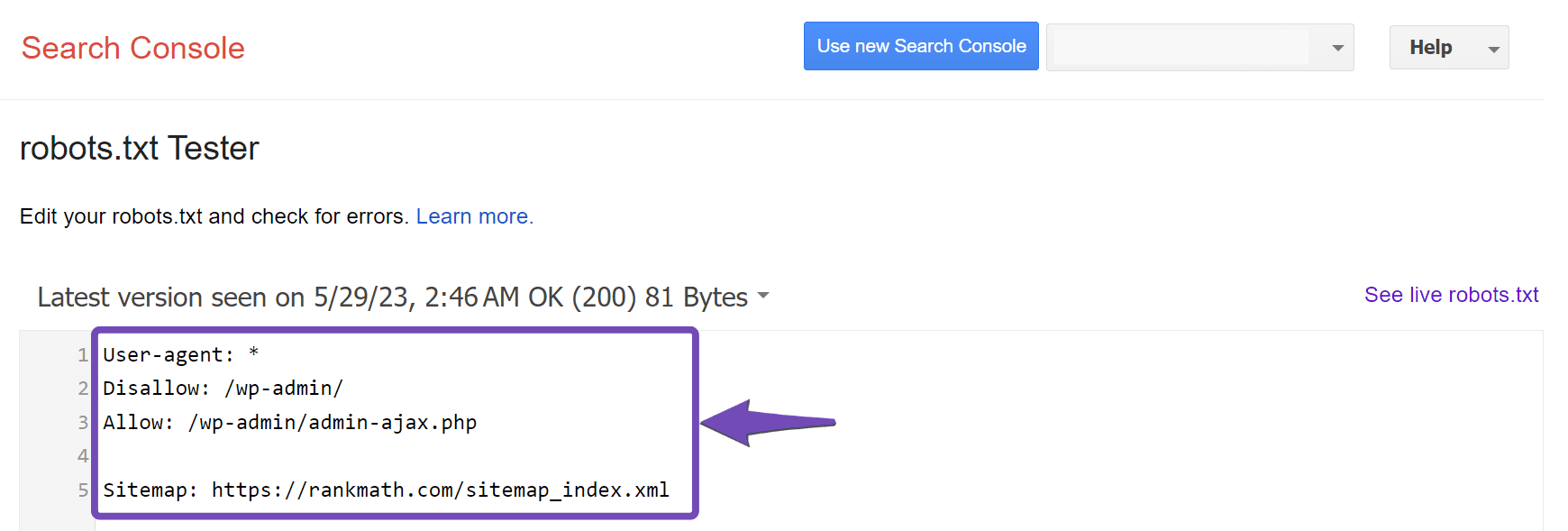

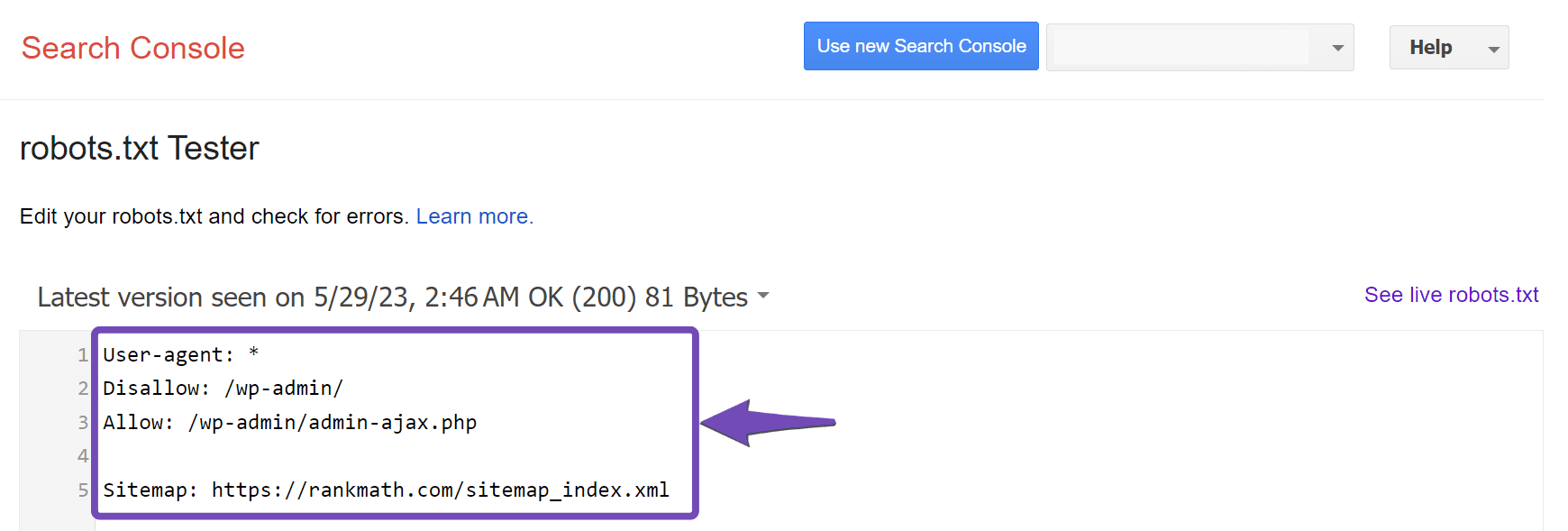

I can check that your robots.txt file is working fine:

Please make sure that you submit only https://giocone.com/robots.txt to your Google Search Console (GSC) account.

Hope that helps and please do not hesitate to let us know if you need our assistance with anything else.

On my Bluehost hosting, I have neither the robots.txt file nor the sitemaps.

Hello,

Please note that Rank Math does not create a physical robots.txt or XML sitemap file among your website files; both are generated virtually (dynamically) when they are requested.

If those files are present in your root directory, then Rank Math is unable to generate or edit those files.
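If you have file access to the site, a quick way to look for such physical files is a short script. This is a rough Python sketch, assuming you know the path to your site's root directory; the function name `find_physical_overrides` is hypothetical, and matching any `sitemap*.xml` file name is an assumption about how leftover sitemap files might be named.

```python
from pathlib import Path


def find_physical_overrides(webroot: str) -> list[str]:
    """List physical robots.txt and sitemap XML files directly inside the webroot."""
    root = Path(webroot)
    found = []

    # A real robots.txt on disk is served instead of the virtual one.
    robots = root / "robots.txt"
    if robots.is_file():
        found.append(robots.name)

    # Assumed naming pattern for sitemap files left behind by other tools.
    found.extend(sorted(p.name for p in root.glob("sitemap*.xml") if p.is_file()))
    return found
```

An empty list from `find_physical_overrides("/path/to/your/site")` suggests that nothing on disk is shadowing the virtual files; any names it returns are candidates to back up and remove.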

Looking forward to helping you.

Hello,

Since we did not hear back from you for 15 days, we are assuming that you found the solution. We are closing this support ticket.

If you still need assistance or any other help, please feel free to open a new support ticket, and we will be more than happy to assist.

Thank you.