
Sitemap could not be read on GSC.

It is fine on the validator and loads fine. I have purged the website cache.


Hello,

We apologize for the issue you are facing with your sitemap not being read in Google Search Console (GSC). Since your sitemap loads fine on the validator and you’ve already purged the website cache, here are a few additional steps you can take to troubleshoot this issue:

- Flush Permalinks: Sometimes, simply flushing permalinks can resolve issues with sitemaps. Go to your WordPress dashboard, navigate to Settings → Permalinks, and click Save Changes without making any changes. This can help refresh the permalink structure.

- Check Sitemap URL: Ensure that the sitemap URL you are submitting to GSC is correct. It should typically look like this: `https://rankmath.com/kb/fix-sitemap-issues/`.

- Rename the Sitemap File: If the issue persists, try renaming your sitemap file in GSC. Go to Indexing → Sitemaps in GSC and submit a new sitemap URL like `https://yourdomain.com/?sitemap=1`.

- Validate Your Sitemap: Although you mentioned it passes validation, double-check using the Validate XML Sitemap tool to ensure there are no hidden issues.

- Check Server Configuration: If you’re using Apache, ensure that your `.htaccess` file has the correct rewrite rules for the sitemap. You can refer to our guide on adding rewrite rules if needed.

- Exclude Sitemap from Caching: Make sure that your caching plugin is not caching the sitemap. You can find instructions on how to exclude sitemaps from caching in our guide.

If you’ve tried all these steps and the issue still persists, please reply to this ticket with your site URL so that a human agent from our support team can assist you further. Thank you for raising this concern!

Sources:

Rank Math Sitemap Issues and Their Fixes » Rank Math

Troubleshooting the “Couldn’t Fetch” Error in Google Search Console » Rank Math

Why the Sitemap_Index.xml is Throwing a 404 Error » Rank Math

How To Exclude Rank Math Sitemaps from Caching » Rank Math

1. I did already

2. The sitemap URL example you provided doesn’t look right. The one I am submitting is correct:

/sitemap_index.xml (https://speedyhomebuyer.co.uk/sitemap_index.xml)

3. I am unable to rename my sitemap as it was generated by you and you don’t offer that functionality.

4. Already checked it using Validate XML Sitemap tool

5. Done

6. Done

Hello,

Thank you for contacting us. We apologize for any inconvenience this issue may have caused.

Please follow the steps below:

1. Flush the Sitemap cache by following this video screencast: https://i.rankmath.com/pipRDp

2. Exclude the Sitemap files of the Rank Math plugin in your caching plugin. The cache could be via a plugin or from the server. For plugins or Cloudflare, please follow this article: https://rankmath.com/kb/exclude-sitemaps-from-caching/

3. Apply the following filter code to your site: `add_filter( 'rank_math/sitemap/enable_caching', '__return_false' );` Here’s how you can add a filter to your WordPress site: https://rankmath.com/kb/wordpress-hooks-actions-filters/

4. Remove all your sitemaps from your Google Search Console and re-submit only the main sitemap (https://website.com/sitemap_index.xml).

5. If the issue persists, please try removing all your sitemaps from your GSC account again and try submitting the following: https://website.com/?sitemap=1

Let us know how that goes. Looking forward to helping you.

I have completed all the above and still no luck. Please can you do some investigating to help figure out what is causing this?

As previously stated, I can’t submit /?sitemap=1 as an alternative, because it failed when I ran it through a validator.

I understand that Rank Math (and many SEO systems) often set X-Robots-Tag: noindex on sitemap files by default, as a measure to prevent the sitemap itself from being indexed. That is typically considered acceptable behaviour, and many documentation threads from your team affirm this.

However, in my case, this noindex header appears to be interfering with Google Search Console’s ability to discover child URLs. My GSC report currently shows “0 discovered URLs”—which is not consistent with a sitemap that is properly readable.

The server error logs show that Rank Math is trying to query a missing database table called rank_math_analytics_objects, which is part of its Analytics module. This means the Analytics feature isn’t fully installed or has become corrupted, causing repeated database errors every time a page loads. While it may not directly affect normal SEO output, it can interfere with how Rank Math generates data—potentially including sitemaps or headers—so it should be repaired or the Analytics module disabled until fixed.

Please help me investigate these issues further.

Hello,

We checked your sitemap but were not able to find any issues there. In this case, please allow Google some time after submitting the sitemap on GSC to crawl and update the status.

You can monitor the “Last read/crawl date” column in the sitemap index, as it will update the date when Google crawls your sitemap.

Please note that each time Google uses a sitemap to find a URL, the count of the Discovered URLs increases by one. Sitemaps are only one of the few methods Google uses to discover your URLs.

When the count of the Discovered URLs is zero or does not match your actual sitemap in your Google Search Console, it means Google didn’t use the sitemap to find the URLs.

Here’s a link for more information: https://rankmath.com/kb/zero-discovered-urls-through-sitemap/
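To compare the Discovered URLs figure with what a sitemap actually lists, it can help to count the entries yourself. The following is a minimal Python sketch, not part of Rank Math: `list_locs` and `SAMPLE_INDEX` are hypothetical names, the sample XML uses placeholder example.com URLs, and the code assumes the standard sitemaps.org namespace.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol (assumed to be the one in use).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def list_locs(xml_text):
    """Return (kind, locs) for a sitemap document.

    kind is the root element name ('sitemapindex' or 'urlset');
    locs holds every <loc> value in the sitemaps.org namespace.
    """
    root = ET.fromstring(xml_text)
    kind = root.tag.split("}")[-1]  # strip the '{namespace}' prefix
    locs = [el.text.strip() for el in root.findall(".//sm:loc", SITEMAP_NS) if el.text]
    return kind, locs


# Hypothetical sample; a real check would pass the body fetched from the sitemap URL.
SAMPLE_INDEX = """<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap><loc>https://example.com/post-sitemap.xml</loc></sitemap>
  <sitemap><loc>https://example.com/page-sitemap.xml</loc></sitemap>
</sitemapindex>"""

kind, locs = list_locs(SAMPLE_INDEX)
print(kind, len(locs))
```

For a sitemap index, each returned entry is a child sitemap. Running the same function on each child gives the number of page URLs that Google could discover through it.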

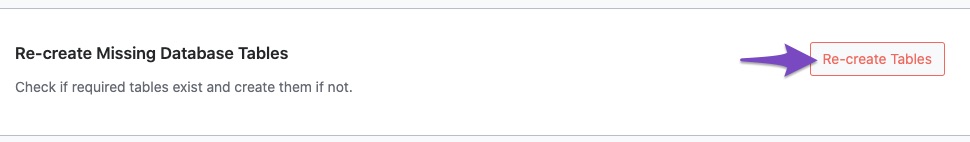

Regarding the missing database table, please head over to your WordPress Dashboard > Rank Math > Status & Tools > Database tools and click on the button “Re-create Tables”.

You may also refer to this guide as well: https://rankmath.com/kb/recreate-missing-database-tables/

Hope this helps, and please do not hesitate to let us know if you need our assistance with anything else.

Thank you.

Thanks for checking this over for me.

I’ve been troubleshooting this for a while, and although the sitemaps validate fine and return 200 OK when viewed in the browser, they continue to show “Couldn’t fetch” in Google Search Console.

After running KeyCDN performance tests, I found that:

The sitemap URLs return 200 OK from the UK and Europe.

They return 401 Unauthorized from the US and Asia.

That makes me think the issue is at a server or firewall level, where requests from non-European regions (like Googlebot’s US IP ranges) are being blocked.

Could you please confirm whether Rank Math’s sitemap generator handles regional requests any differently, or if you’d also consider this a server-side access restriction rather than a plugin or configuration problem?

I just want to be sure I’m not overlooking anything before asking the host to whitelist Googlebot IP ranges globally.

Thanks again for your help — this one’s been a bit of a puzzle!

Hello,

Rank Math’s sitemap generator does not handle requests differently based on region — it serves the same sitemap globally.

From what you’ve described, this issue is not caused by Rank Math but rather by a server or firewall-level restriction that’s blocking requests from certain regions, including Googlebot’s US IP ranges. We recommend asking your hosting provider to review and whitelist Googlebot and other legitimate crawler IPs to ensure your sitemap is fully accessible.
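Before asking a host to whitelist crawler IPs, it may be useful to confirm that a blocked address from the server logs really belongs to Google. Google's documentation describes a reverse DNS lookup followed by a forward lookup. The following is a rough Python sketch of that idea under stated assumptions: the function names are hypothetical, the accepted hostname suffixes should be checked against Google's current documentation, and `socket.gethostbyname_ex` only covers IPv4.

```python
import socket

# Assumption: domains Google documents for its crawlers; verify before relying on them.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_google_hostname(hostname):
    """True if the hostname ends with one of the assumed Google crawler domains."""
    return hostname.rstrip(".").lower().endswith(GOOGLE_SUFFIXES)


def verify_googlebot_ip(ip):
    """Reverse-resolve the IP, check the domain, then forward-resolve to confirm."""
    try:
        hostname, _, _ = socket.gethostbyaddr(ip)  # reverse DNS lookup
    except (socket.herror, socket.gaierror):
        return False
    if not is_google_hostname(hostname):
        return False
    try:
        _, _, addresses = socket.gethostbyname_ex(hostname)  # forward lookup (IPv4)
    except socket.gaierror:
        return False
    return ip in addresses


# Usage (needs working DNS): verify_googlebot_ip("<IP address from your access log>")
```

The forward lookup matters because anyone who controls reverse DNS for their own IP range can make it return a Google-looking hostname. Only the round trip ties the name back to the address.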

We hope this helps. Please let us know if you have further questions or concerns.

Hi team,

I’m following up regarding the sitemap fetch issue that’s been ongoing since the summer.

After extensive testing over the last few months — including multiple rounds of troubleshooting with my developer and hosting provider — we’ve now completely ruled out server-level restrictions, firewalls, or caching as the cause. All sitemap URLs return 200 OK globally and validate without issue.

The only consistent variable left is the X-Robots-Tag: noindex header that Rank Math adds to sitemap files by default.

I understand this is intentional behaviour, but in our case it appears to be preventing Google from reading the sitemap index and child sitemaps. The “Couldn’t fetch / General HTTP error” message in Google Search Console occurs immediately after Google encounters this header.

To recap what has already been done:

– Server firewall (StackProtect) disabled — confirmed global 200 OK responses.

– Cache and CDN layers flushed and bypassed.

– Rank Math cache disabled via rank_math/sitemap/enable_caching filter.

– Sitemaps tested via direct URL, validator, and header inspection — all valid, consistent XML.

– Tested under both Domain property and HTTPS URL prefix properties in Search Console — both produce identical fetch errors.

At this point, it appears that the X-Robots-Tag: noindex directive is being misinterpreted by Googlebot and causing the sitemap to fail processing.
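For anyone repeating the header inspection described above, a small script can record the status code and the X-Robots-Tag header in one step. This is a minimal Python sketch under stated assumptions: `summarize` and `check` are hypothetical helper names, and the user-agent string only imitates Googlebot. A request sent this way still comes from your own IP address and region, so it cannot reproduce a firewall rule that targets Google's IP ranges.

```python
import urllib.error
import urllib.request

# Assumption: a Googlebot-style user agent string, used for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def summarize(status, headers):
    """Reduce a response to the fields relevant to a sitemap fetch problem."""
    lowered = {key.lower(): value for key, value in headers.items()}
    robots = lowered.get("x-robots-tag", "")
    return {
        "status": status,
        "ok": status == 200,
        "content_type": lowered.get("content-type", ""),
        "x_robots_tag": robots,
        "noindex": "noindex" in robots.lower(),
    }


def check(url, user_agent=GOOGLEBOT_UA, timeout=10.0):
    """GET the URL and summarize the response, including 4xx/5xx responses."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return summarize(response.status, dict(response.headers.items()))
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx; the status and headers are still available.
        return summarize(err.code, dict(err.headers.items()))


# Usage (performs a real request): print(check("https://website.com/sitemap_index.xml"))
```

A result with `ok` true and `noindex` true matches the behaviour Rank Math describes as the default. A 401 or 403 here would point to an access restriction rather than to the header.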

Could you please escalate this for a technical review by your development team and confirm whether there is a supported way to disable or override the X-Robots-Tag header for sitemap files?

Given the length of time this issue has persisted and the amount of time both myself and the developer have invested in diagnosing it, we’d really appreciate a more definitive resolution or a plugin-level adjustment to allow users to manage this header.

Thank you again for your time — this is now the only unresolved link in the chain.

Best regards,

Lucy Hoad

Hello,

We can assure you that having an `X-Robots-Tag: noindex` header in the sitemap shouldn’t cause Google to not read or fetch it.

XML Sitemaps are designed to be crawled by search engines, not indexed. The `X-Robots-Tag: noindex` directive ensures that the sitemap itself does not appear in search results, but this does not prevent Google from crawling its contents and indexing the pages listed within it.

There’s no need to worry, as this is the expected behavior for sitemaps.

However, if you still wish to remove the noindex tag from your sitemap for any specific reason, you can use the filter in your site:

    add_filter( 'rank_math/sitemap/http_headers', function( $headers ) {
        // Only the sitemap index is changed; child sitemaps keep their headers.
        if ( '/sitemap_index.xml' !== $_SERVER['REQUEST_URI'] ) {
            return $headers;
        }
        // Drop the noindex header for the sitemap index response.
        unset( $headers['X-Robots-Tag'] );
        return $headers;
    } );

Please note that not all sitemaps are processed by Google, and not necessarily because there is something wrong with the sitemap. Even a perfect sitemap may not be processed by Google.

Most of the time, it’s still sitting in the queue waiting for processing. Remember, Google is processing millions of sitemaps daily, and sometimes it takes time to get to yours. But for whatever reason, Google never gets around to some sitemaps. It just doesn’t think it is worth it.

And Search Console sometimes shows ‘Pending’ as ‘couldn’t fetch’ incorrectly. You can check the replies of Google experts for the Couldn’t Fetch (especially for the TLDs except for .com) issues on the following threads:

https://support.google.com/webmasters/thread/29055233/missing-xml-sitemap-data-in-google-search-console-for-tld-domain?hl=en

https://support.google.com/webmasters/thread/31835653/sitemap-couldn-t-fetch-sitemap-couldn-t-be-read-by-google-search-console?hl=en

Please share an updated screenshot from your Sitemap report in Google Search Console. This will help us check the exact issue and guide you further.

Looking forward to helping you.

Hello,

Since we did not hear back from you for 15 days, we are assuming that you found the solution. We are closing this support ticket.

If you still need assistance or any other help, please feel free to open a new support ticket, and we will be more than happy to assist.

Thank you.


The ticket ‘Sitemap could not be read’ is closed to new replies.