
Hello Rank Math Support,

I am experiencing an ongoing issue with Google indexing specific pages on my site because a “noindex” directive is persistently detected in the “X-Robots-Tag” HTTP header. I have already worked through several troubleshooting steps, but the issue persists, particularly for specific sitemaps. Here is a full summary for your review:

Indexing Issue: The main sitemap can be indexed by Google. However, some sub-sitemaps display a “noindex” error, which prevents them from being indexed correctly.

Steps Taken:

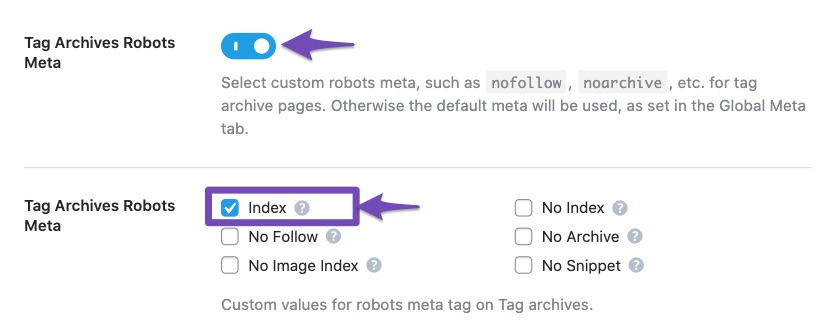

1. Verified Rank Math Settings: Double-checked the Titles & Meta settings in Rank Math to ensure no pages are set to “noindex.”

2. Modified .htaccess File: With Cloudways support, we added the line `Header set X-Robots-Tag "index, follow"` to the .htaccess file, as suggested in your documentation. However, the noindex error persists.

3. Checked robots.txt: Confirmed that no Disallow rules in the robots.txt file affect the pages in question.

4. Confirmed Server Configuration: Cloudways support confirmed that our stack uses both Apache and Nginx. They verified that there is no noindex directive in the Apache configuration, and they found no Nginx header or directive that would be adding one.
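The robots.txt check in step 3 can be repeated offline with Python's standard-library `urllib.robotparser`. This is a minimal sketch: `blocked_urls` and the example URLs are hypothetical. Keep in mind that robots.txt controls crawling, not the X-Robots-Tag header, so this only rules out crawl blocking. Python's parser also applies rules in file order rather than Google's longest-match precedence, so treat its answer as an approximation.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt text disallows for user_agent.

    Hypothetical helper: it parses a local copy of robots.txt, so no network
    access is needed. Rules are matched in file order (Python's behaviour),
    which can differ from Google's longest-match precedence.
    """
    parser = RobotFileParser()
    # parse() accepts an iterable of lines and marks the file as read.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

If the live robots.txt content is pasted into `robots_txt` and a few URLs from an affected sub-sitemap are passed in, an empty result is consistent with what step 3 found.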

Despite these steps, Google still cannot index the pages in the affected sub-sitemaps and continues to report “noindex” detected in the X-Robots-Tag HTTP header.
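To see exactly which X-Robots-Tag values the server sends for an affected URL, and whether any of them amount to noindex, a minimal standard-library Python sketch could look like this. The function names are hypothetical. The parsing is simplified: it follows the `crawler: directives` form described in Google's robots meta tag documentation and treats `none` as implying `noindex`. It sends a HEAD request, which some servers or caches may answer differently from a GET.

```python
import urllib.request

# Directives written as "name: value", so their colon does not introduce a
# crawler name. This is a simplified, assumed list, not an exhaustive one.
KEY_VALUE_DIRECTIVES = {
    "unavailable_after", "max-snippet", "max-image-preview", "max-video-preview",
}


def directives_for(header_values, user_agent="googlebot"):
    """Collect directive names from X-Robots-Tag values that apply to user_agent."""
    found = set()
    for value in header_values:
        head, sep, rest = value.partition(":")
        name = head.strip().lower()
        target = None
        # A leading "crawler:" prefix (e.g. "googlebot: noindex") scopes the value.
        if sep and "," not in head and name not in KEY_VALUE_DIRECTIVES:
            target, value = name, rest
        if target is not None and target != user_agent.lower():
            continue  # value is scoped to a different crawler
        for directive in value.split(","):
            directive = directive.strip().lower()
            if directive:
                # Keep only the name, e.g. "max-snippet: 20" -> "max-snippet".
                found.add(directive.split(":")[0].strip())
    return found


def is_noindex(header_values, user_agent="googlebot"):
    """True if any applicable value contains noindex (or none, which implies it)."""
    found = directives_for(header_values, user_agent)
    return "noindex" in found or "none" in found


def fetch_x_robots_tag(url, timeout=10):
    """Return every X-Robots-Tag header value sent for url, using a HEAD request."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # get_all() returns None when the header is absent.
        return response.headers.get_all("X-Robots-Tag") or []
```

Calling `fetch_x_robots_tag` on a URL from an affected sub-sitemap returns every X-Robots-Tag value, not just the first. This matters because an `index, follow` header added in .htaccess can coexist with a separate `noindex` header added elsewhere in the stack. Passing that list to `is_noindex` shows whether any of the values apply to Googlebot.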

Could you please review this information and let me know if there are any additional steps or Rank Math-specific configurations that could help resolve this issue?

Thank you very much for your time and assistance.

The ticket ‘Persistent “noindex” Detected in HTTP Header for Specific Sitemaps’ is closed to new replies.