
Should I add a fully permissive robots.txt and fix the errors in Search Console? More than 900 errors are impossible to correct!


Hello,

Thank you for your query, and we are sorry for the trouble this has caused.

When you say the errors are impossible to correct, could you share more details? Please include some example URLs and the specific errors that make them impossible to correct.

If you want to stop search engines from crawling these URLs, you can add Disallow rules for them to your robots.txt file.
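A quick way to sanity-check Disallow rules before uploading them is Python's standard `urllib.robotparser`. This is a minimal sketch: the domain example.com and the paths `/old-page/` and `/tag/` are hypothetical placeholders, and this parser only does plain prefix matching, so wildcard patterns are not supported.

```python
from urllib.robotparser import RobotFileParser

# Placeholder rules: /old-page/ and /tag/ are hypothetical paths.
ROBOTS_TXT = """\
User-agent: *
Disallow: /old-page/
Disallow: /tag/
"""


def is_crawlable(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if `agent` may crawl `url` according to `robots_txt`.

    urllib.robotparser only does prefix matching: "/tag/" blocks every URL
    whose path starts with "/tag/", but wildcards inside a path (such as
    "*?amp=1*") are not interpreted.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/old-page/",
        "https://example.com/tag/puzzle/",
        "https://example.com/games/",
    ):
        print(url, "->", "allowed" if is_crawlable(ROBOTS_TXT, url) else "blocked")
```

Run as a script, this reports the first two URLs as blocked and `/games/` as allowed, since no rule's prefix matches it.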

After that, mark these errors as fixed to help improve your site’s health.

Looking forward to helping you.

For a year and a half, I clicked validate without verifying the URLs first. I’ve recently found that verifying the URLs of the failing pages was a critical step.

I didn’t understand Search Console, despite the many guides I read and the questions I asked.

I have now fixed the posts page: I had removed it from the WordPress settings and forgotten to set it again, which is why many posts could not be indexed. I think I will solve my problems with Search Console soon.

Hello,

Several settings in WordPress and Rank Math control the indexing of posts and pages. You need to make sure that these settings are configured correctly to allow search engines to index them.

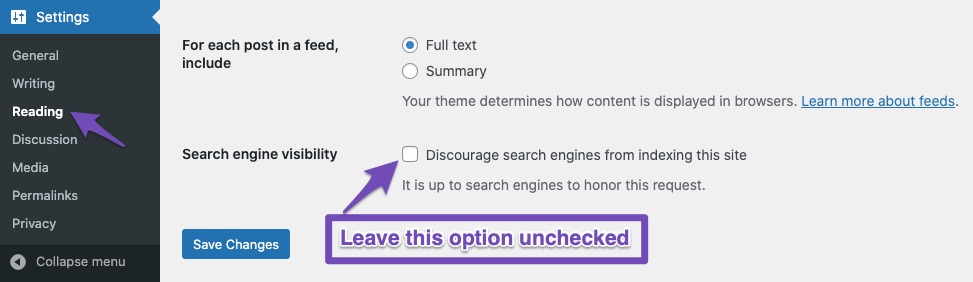

Please check the Search Engine Visibility settings in WordPress. Please go to WordPress Dashboard > Settings > Reading and ensure that the checkbox labelled ‘Discourage search engines from indexing this site’ is unchecked, as shown in the screenshot below:

Also, go to WordPress Dashboard > Rank Math > Titles & Meta > Posts/Pages and confirm that Robots Meta is set to Index.
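To confirm what a page actually outputs after changing these settings, you can look for the robots meta tag in its HTML source. This is a minimal sketch using Python's `html.parser`; the helper `is_noindex` and the sample markup are hypothetical, and it ignores the `X-Robots-Tag` HTTP header, which can also carry `noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            content = attributes.get("content", "")
            # "index, follow, max-snippet:-1" -> ["index", "follow", "max-snippet:-1"]
            self.directives.extend(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindex(html: str) -> bool:
    """Hypothetical helper: True if the page's robots meta tag blocks indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


if __name__ == "__main__":
    sample = '<head><meta name="robots" content="follow, index, max-snippet:-1"/></head>'
    print(is_noindex(sample))  # False
```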

Please also share what you find in Google Search Console so we can assist further.

Let us know how that goes and don’t hesitate to get in touch if you have any other questions.

Thank you.

For posts and pages, I have turned off some of the robots meta settings in the main options.

https://imgur.com/a/k3eXLZY

https://imgur.com/a/NQPkTOY

I chose to set the categories to index because they are useful for online games.

https://imgur.com/a/SUckxpP

Finally, attachments and tags are set to noindex.

https://imgur.com/a/LLNk8uw

https://imgur.com/a/qjmLQB5

The WordPress search engine discouragement setting is disabled.

https://imgur.com/a/S52aDuz

For Search Console, I think the procedure I described earlier is correct, but since I figured out how to do it, I got an error for static pages: I forgot to set the games page again. Now I’m waiting for the validations to see whether things improve.

https://imgur.com/a/ZzSRwmF

But what amazes me most is how damn difficult it is to get results on search engines, because they exclude my results. I noticed this oddity because there were few results for my URLs or images, so even if my work had been terrible, it would still have ranked last. It wasn’t even among the URLs excluded from the results. So I know for a fact that it wasn’t a ranking or high-competition issue. But 4 years have passed, and I’m sure I did a good job.

Hello,

It appears you are on the right track by making the corrections. Continue monitoring the validation process in Google Search Console to see if the errors get resolved.

Also, consider posting your issue in the Google Search Central Help Community for additional insights and suggestions from other webmasters.

It can indeed be challenging to get results on search engines, especially with the evolving SEO landscape. However, with consistent efforts in optimizing your site’s content, structure, and settings, you can improve your site’s visibility over time.

We hope this helps.

The biggest obstacle is getting the pages to appear without Google removing them. After that, improving the SEO shouldn’t be too hard. Compared to 3 months ago, when I saw some slight improvements, the site should rank better than it did before.

Unfortunately, Google support is of no help. They share their guides, but they don’t say exactly what the problem plaguing me is. We’ll see how it goes!

Hello,

We understand how frustrating it can be when your efforts don’t yield the expected results. Here are some additional steps you can take regarding this issue:

– Revisit Google Search Essentials (formerly the Webmaster Guidelines) to ensure that your site complies with all their policies. Sometimes, seemingly minor violations can lead to pages being deindexed.

– In Google Search Console, navigate to the “Manual Actions” report under “Security & Manual Actions” to see if any manual penalties have been applied to your site.

– Consider implementing advanced schema markup to help Google understand your content better. Rank Math PRO makes it easy to add and customize schema types for your pages.
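For reference, schema markup is usually embedded in the page as a JSON-LD `<script>` tag. Rank Math PRO generates this for you, so the sketch below only illustrates the shape of the output: `Article` is a schema.org type, while the helper name `article_jsonld` and the sample values are hypothetical.

```python
import json


def article_jsonld(headline: str, url: str, date_published: str) -> str:
    """Hypothetical helper: wrap a minimal schema.org Article in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "url": url,
        "datePublished": date_published,  # ISO 8601 date
    }
    # Escape "</" so a value can never close the <script> element early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


if __name__ == "__main__":
    print(article_jsonld("Top puzzle games", "https://example.com/top-puzzle-games/", "2024-01-15"))
```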

SEO improvements take time, and it’s crucial to be patient while consistently applying best practices. Continue monitoring your progress and making adjustments as needed.

Unfortunately, I still have serious problems validating fixes in Search Console. Should I switch to a permissive robots.txt, run the validations, and then restore the robots.txt I need?

Hello,

If a fix fails validation in Google Search Console, it means Google tried to confirm your fixes but still found the issues.

Since the validation failed, could you share a screenshot of the URL(s) or issues that Google says caused the validation to fail? You can find them by clicking See details on the issue details page.

Looking forward to helping you.

Thank you.

I made the robots.txt permissive again and added the sitemap.
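A fully permissive robots.txt with a sitemap reference can be checked with Python's `urllib.robotparser` (`site_maps()` needs Python 3.8+). In this sketch the domain is a placeholder and `sitemap_index.xml` is assumed as the sitemap path; use whatever URL your sitemap actually lives at.

```python
from urllib.robotparser import RobotFileParser

# Permissive rules: an empty Disallow value blocks nothing.
# example.com and sitemap_index.xml are placeholder assumptions.
PERMISSIVE_ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(PERMISSIVE_ROBOTS_TXT.splitlines())

if __name__ == "__main__":
    print(parser.can_fetch("Googlebot", "https://example.com/any-page/"))  # True
    # Sitemap lines apply regardless of the User-agent group they follow.
    print(parser.site_maps())  # ['https://example.com/sitemap_index.xml']
```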

This is the only way I got results in the SERPs. I’ll probably fix the Search Console problems as well. I’ve been saying for 4 years that something is blocking me on search engines and their webmaster tools, but no one believes me!

Hello,

Some of the static pages are indeed not indexed on the SERP.

We suggest following this guide to help you address that: https://rankmath.com/kb/discovered-currently-not-indexed/

We checked these pages: they are correctly configured, their canonical URLs point to the proper version, and no redirection is happening. In this case, it is possible that Google hasn’t seen these changes yet.

Otherwise, your last option is to ask the Google community for further insights into the situation.

As for the AMP pages: if AMP is no longer available on the site, URLs with `?amp=1` will have their canonical URL pointing to the original page, and Google will report errors for any of those URLs it has found. However, these errors will not cause any issues with the indexing of the original URLs. If you redirect them instead, Google will report them as “Page with redirect”.
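To check the canonical behaviour of a specific `?amp=1` URL, one option is to compare its `<link rel="canonical">` with the same URL after removing the `amp` parameter. This is a minimal sketch: the helper names are hypothetical, the page HTML would have to be fetched separately, and the comparison is an exact string match, so a missing trailing slash counts as a mismatch.

```python
from html.parser import HTMLParser
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = {name: (value or "") for name, value in attrs}
        if "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href")


def strip_amp_param(url: str) -> str:
    """Remove the `amp` query parameter, keeping any other parameters."""
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != "amp"]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), parts.fragment))


def canonical_points_to_original(amp_url: str, html: str) -> bool:
    """Hypothetical helper: True if the page's canonical equals the non-AMP URL."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical == strip_amp_param(amp_url)
```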

If you don’t want Google to crawl the `?amp=1` URLs, add the following rule to your robots.txt file:

`Disallow: *?amp=1*`

Looking forward to helping you.
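Python's `urllib.robotparser` only does prefix matching, so a rule like `*?amp=1*` has to be tested with a small wildcard matcher. This sketch follows the pattern rules Google documents for robots.txt (`*` matches any sequence of characters, a trailing `$` anchors the end of the URL) and checks one pattern against a path plus query string; it does not resolve Allow/Disallow precedence. The helper names are hypothetical.

```python
import re


def robots_pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate one robots.txt path pattern into a compiled regex.

    '*' matches any run of characters; a trailing '$' anchors the end of the
    URL. Everything else is literal and matched from the start of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal chunk, then rejoin the chunks with ".*" for every "*".
    regex = ".*".join(re.escape(chunk) for chunk in pattern.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_blocked(rule: str, path_and_query: str) -> bool:
    """Hypothetical helper: True if a single Disallow pattern matches."""
    return robots_pattern_to_regex(rule).match(path_and_query) is not None


if __name__ == "__main__":
    rule = "*?amp=1*"
    for path in ("/some-game/?amp=1", "/some-game/", "/some-game/?page=2&amp=1"):
        print(path, "->", "blocked" if is_blocked(rule, path) else "allowed")
```

With this rule, `/some-game/?amp=1` is blocked, while `/some-game/?page=2&amp=1` is not, because the pattern requires the literal `?amp=1`.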

Hello,

Since we did not hear back from you for 15 days, we are assuming that you found the solution. We are closing this support ticket.

If you still need assistance or any other help, please feel free to open a new support ticket, and we will be more than happy to assist.

Thank you.

The ticket ‘How do I validate URLs that are impossible to correct?’ is closed to new replies.