Hello,

We apologize for the confusion you’re experiencing with the "Blocked by robots.txt" issue. It sounds like there may be some unintended rules in your robots.txt file or possibly a plugin conflict that is causing Ahrefs to report these pages as blocked, even though Google has indexed them.

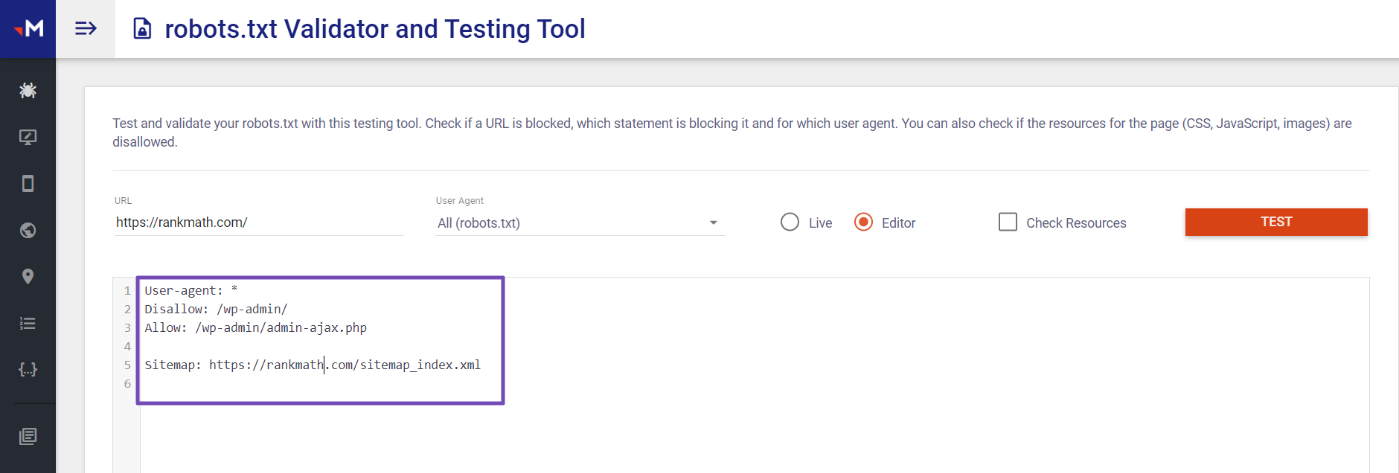

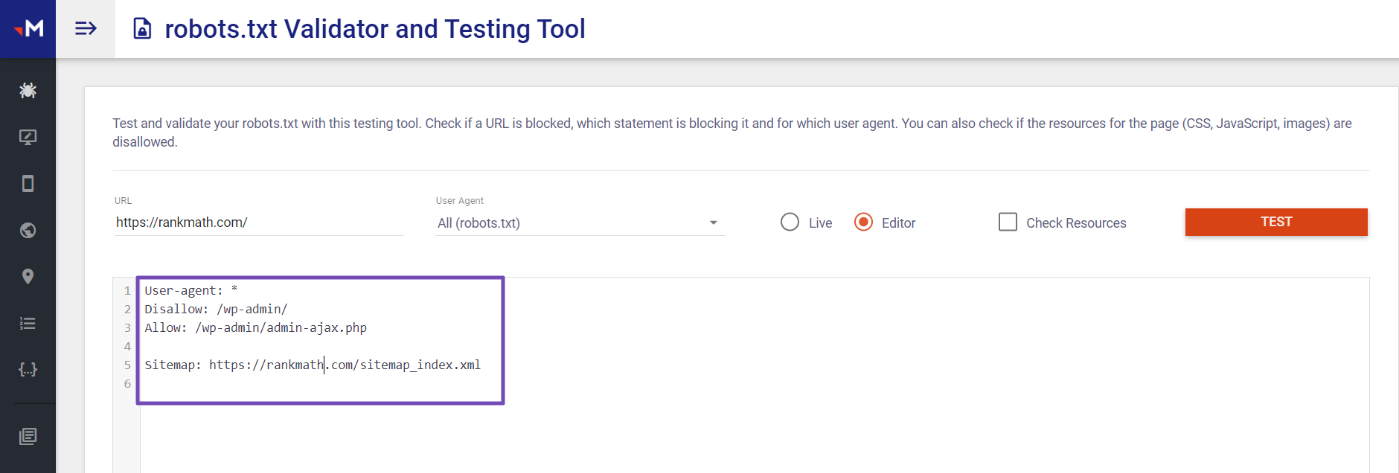

To troubleshoot this, we recommend checking your robots.txt file to ensure that it does not contain any rules that might be blocking access to those pages. You can do this by navigating to Rank Math SEO → General Settings → robots.txt and reviewing the directives there. Additionally, you can use the Robots Testing Tool to see how your current robots.txt file is being interpreted by crawlers.
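If you would like a quick local check as well, the sketch below uses Python's standard `urllib.robotparser` module to test which URLs a set of rules disallows for a given crawler. The rules, the example.com domain, and the sample paths are hypothetical placeholders, not your actual file; paste in the contents of your own robots.txt to try it. Python's parser may not interpret every rule exactly as a specific crawler does, so treat the result as a first pass only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; replace with the contents of your own robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /tag/
"""


def blocked_urls(robots_txt, user_agent, urls):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical URLs on a placeholder domain.
sample_urls = [
    "https://example.com/blog/page/7/",
    "https://example.com/tag/seo/",
]
print(blocked_urls(SAMPLE_ROBOTS_TXT, "AhrefsBot", sample_urls))
```

With these sample rules, only the /tag/ URL is reported as blocked, because no rule matches the paginated path.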

Regarding the URLs ending with numbers (like /page/7/), these are typically generated by WordPress for paginated content, such as blog posts or archives. If you want to prevent these pages from being indexed, you can adjust your settings in Rank Math:

- Go to Rank Math SEO → Titles & Meta.

- Under the Archives tab, you can set the pagination settings to "noindex" if you do not want search engines to index these pages.

This should help reduce the number of paginated pages that are available for indexing.
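To confirm that the setting has taken effect, you could look in a paginated page's source for a robots meta tag containing `noindex`. The sketch below does this with Python's standard `html.parser` module; the sample markup is a hypothetical stand-in for your page source, and the exact tag your site outputs may differ.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in any <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Split "noindex, follow" into individual lowercase directives.
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindex(html):
    """Return True if the HTML carries a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical page source for illustration.
SAMPLE_HTML = '<html><head><meta name="robots" content="noindex, follow"/></head><body></body></html>'
print(is_noindex(SAMPLE_HTML))
```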

If you continue to face issues or have further questions, please reply to this ticket, and a human agent from the Rank Math Support team will assist you further. Thank you for raising your concerns!

Sources:

How to Fix ‘Blocked by robots.txt’ Error in Google Search Console » Rank Math

Common robots.txt Issues & How to Fix Them » Rank Math

Also, I did check Titles & Meta, and the “Noindex Empty Category and Tag Archives” option is toggled ON.

Also, I just figured out that it’s not the source URLs that are being blocked; it’s the “Target URL” (see attached). With that being said, does it hurt SEO to set these /tag/ URLs to nofollow? I mean, what’s the best thing to do here? Thank you!

https://www.dropbox.com/scl/fi/je71b0pcer8rn26gxfqy6/image.png?rlkey=4znnz02dq0yo6xdi93799w1ps&dl=0

Hello,

Thank you for contacting Rank Math support.

We can confirm that a rule in your robots.txt file is blocking your /tag/ URLs, as shown in the screenshot below:

If you do not want these URLs to be blocked, you can remove that rule. Blocking or not blocking the /tag/ pages does not by itself affect your SEO. However, if your tag pages are not unique, that would affect your SEO, and if they are unique and contain quality, keyword-rich content, they can help with your keyword rankings.

Lastly, the URL (/blog/page/7/) is a pagination page of your blog. WordPress generates it automatically when your blog posts do not fit on a single page.
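As a rough illustration of where a URL such as /blog/page/7/ comes from, the sketch below computes the archive pages a blog would need. The post count and the posts-per-page value are made-up numbers (your site's value is under Settings → Reading), and the URL pattern simply mirrors the one in this ticket.

```python
import math


def paginated_urls(total_posts, posts_per_page, base="/blog/"):
    """List the archive URLs needed to show total_posts; page 1 is the base URL."""
    pages = max(1, math.ceil(total_posts / posts_per_page))
    return [base] + [f"{base}page/{n}/" for n in range(2, pages + 1)]


# Hypothetical numbers: 65 posts at 10 per page need 7 pages.
urls = paginated_urls(65, 10)
print(urls[-1])  # /blog/page/7/
```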

We hope this helps clarify the issue. Please let us know if you have any other questions or concerns.

Hello,

We are glad to hear that this issue has been resolved. Thank you for letting us know. This ticket will be closed now, but you can always open a new one if you have any other questions or concerns. We are here to help you with anything related to Rank Math.

We appreciate your patience and cooperation throughout this process.

Thank you for choosing Rank Math.